High-Performance Tools and Methods

As the worldwide volume of climate data is expected to increase roughly 1000-fold over the next 10-20 years, it will be imperative to make use of high performance computing (HPC) solutions in order to process and analyze this data. A comprehensive approach is needed that entails scalability of data access, scaling queries and exploration, scalable and power efficient techniques for data mining on large systems, and the use of accelerators where possible. Moreover, emerging trends in high performance systems do not benefit all stages of the scientific discovery process equally. In particular, there are widening gaps between the capabilities of HPC systems and the ability of application scientists to efficiently store and analyze the data produced by scientific simulations. As such, our project has focused on developing algorithms, software, and tools to accelerate data analysis and I/O to help bridge these gaps.

We have extended and optimized our previous work on high performance data analytics kernels to include massively parallel and scalable clustering algorithms, as well as technologies for compressing and querying huge datasets and for performing similarity searches. We have also released software for this. Finally, in addition to the published work discussed in this section, we are actively collaborating within the Expeditions project to develop HPC solutions for work described in other sections of the report, including bootstrapping methods for extreme value prediction and MRF-based abrupt change detection. A paper describing one of those HPC solutions - the one for MRF-based abrupt change detection - won the Best Paper Award at the KDD 2013 BigMine Workshop.

Highlights:

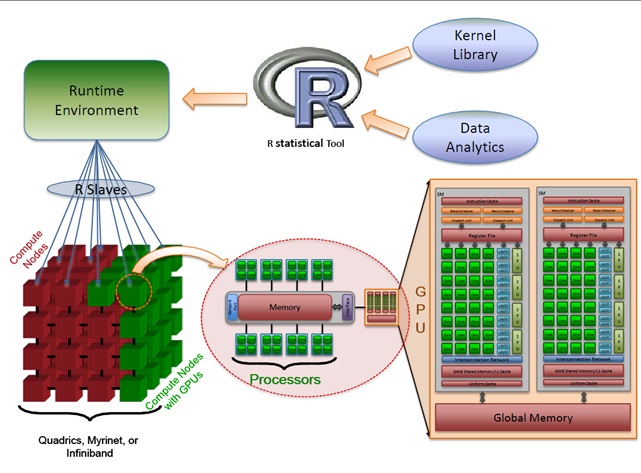

Efforts in this area are focused on creating algorithms of broad applicability: high performance analytical kernels and spatio-temporal data management tools. In Year 1-2, for kernels, we have created a library of common data mining tasks that includes k-means clustering, fuzzy k-means, and principal component analysis, implemented on GPUs using CUDA. More recently, we have expanded the kernel library to include highly scalable data clustering algorithms, including a novel k-medoids algorithm, AGORAS. These algorithms have shown speedups of two to three orders of magnitude.

Our accomplishments for kernels in Year 3 have focused on developing HPC tools for specific climate applications, as well as more broadly applicable tools for scalable data analytics. Our work on maximal a posteriori estimation using ADMM, previously used for drought detection, has demonstrated scalability to more than a thousand of processes. We've also continued work on high performance data mining kernels. In particular, we have developed scalable kernels for hierarchical and density-based clustering that are scalable to thousands of processes and released them as open-source software.

In Year 4, we also developed two methods for knowledge-priors driven community detection in large-scale networks. Mining for communities with query nodes as knowledge priors allows for filtering out irrelevant information and for enriching end-users knowledge associated with the problem of interest. To address the computational challenges associated with community detection (e.g., memory usage and computational complexity), we explored out-of-core and indexing techniques for improved memory efficiency, as well as a parallelizable partial computation strategy for improved query response time. Compared to the state-of-the-art, our techniques generally reduced peak memory usage between 100x to 1000x, and our partial computation strategy often improved query response time by 10 to 100x. Additionally, we applied these techniques to climate networks to explore associations between Atlantic hurricanes and global climate patterns.

In another project, in Year 1, we developed an approach to handle underdetermined problems, i.e., problems with many more features than data points, we developed BENCH (Biclustering-driven ENsemble of Classifiers), an algorithm to construct an ensemble classifiers through concurrent feature and data point selection guided by unsupervised knowledge obtained from biclustering. We published this work in the premier AI conference, IJCAI 2011.

With respect to data management, in Year 1-2, we have developed the ISOBAR code and theoretical performance model to provide a predictive, scalable and power-efficient implementation of lossless compression for scientific data. To support analytics-driven efficient query processing, we developed the ISABELLA and ALACRITY codes. ISABELLA supports indexing of scientific floating point data with lossy compression, whereas ALACRITY supports indexing of data with lossless compression. Both ISABELLA and ALACRITY offer significant data storage reduction and a more than 10-fold speed-up. All three packages are released as open source software and integrated into the parallel I/O framework, ADIOS, detailed below. For data management in Year 4, we developed a fast set intersection approach that couples the storage light-weight PFOR-Delta indexing format with computationally-efficient bitmaps through a specialized on-the-fly conversion. PFORDelta-bitmap approach can speed up conjunctive query answering by up to 7.7x versus the state-of-the-art approach, while using indexes that require 15%-60% less storage in most cases.

For data management in Year 3, we also developed the DIRAQ framework, which offers a parallel, scalable, in situ index-building algorithm that creates aggregated, group-level ALACRITY indexes across large spatial contexts (i.e., many simulation cores) without degrading simulation performance. DIRAQ outperforms indexes produced by FastQuery, the comparable, state-of-the-art parallel indexing technique, by 3 to 6x. Its aggregation optimizations outperform standard parallel I/O methods (MPI-IO, POSIX), in some cases by up to 10x, with superior scalability on the ANL BlueGene/P supercomputer for tests ranging from 1024 to 8192 cores. The DIRAQ paper received Best Paper Award at the HPDC 2013 conference. We have also developed PARLO, a parallel, run-time, multi-level data layout optimization framework, which integrates multiple layout optimization techniques into a single, integrated scheme optimized for heterogeneous access patterns induced by query-driven analytics. PARLO demonstrated 2 to 26x improvements on read access times versus the raw format. In Year 4, we also developed an online access pattern analysis and prefetching framework that performs incremental analysis during application's run-time, supporting various structured and unstructured access patterns and performs pattern-aware prefetching with low overhead. The proposed method have demonstrated run-time reductions up to 26% on top of less than 5% overhead in different benchmarks with various access patterns.

In Year 4, we also developed the "Transforms" framework for the ADIOS parallel I/O middleware, enabling the seamless deployment of a wide range of compression, indexing, level-of-detail and storage layout optimization technologies to application scientists, effectively overcoming the adoption barrier. We have demonstrated in situ ALACRITY indexing and in situ PARLO layout optimization through this framework in real-world applications, deployed at zero integration effort by the end-user.

In another indexing effort, we have developed an image indexing technique based on a new Locality Sensitive Hashing (LSH) scheme. The proposed LSH technique can be used for efficient image searches. When used for real data from the Defense Meteorological Satellite Program (DMSP) imagery, it has shown storage and computation improvements of up to 50%. In addition, we have developed a classification algorithm for image data using semi-supervised support vector machines, and probabilistic latent semantic analysis. Also in Year 3, we improved upon our proposed LSH approach and used it as the foundation to develop satellite image search software, named SIBRA (Satellite Image-Based Retrieval Application), which provides an interactive image search platform. SIBRA can be used to quickly find similar satellite images from large data archives for an event of interest (current image). This software outperforms linear search by several orders of magnitude. SIBRA is also user-friendly and allows users to utilize it with other multimedia items other than satellite images. In Year 4, we developed a parallel LSH capable of accelerating LSH indexing by more than 50x.

Finally, we have continued and extended our work on PnetCDF, a parallel interface for the ubiquitous NetCDF format. In Year 4, our work on subfiling has improved write performance by 36%-137% using thousands of MPI processes. This subfiling feature has been incorporated into the recent PnetCDF release, initially within 1.4.1 and now by default in 1.5.0.

People: Agrawal, Choudhary, Homaifar, Samatova